Datalab: Running notebooks against large datasets

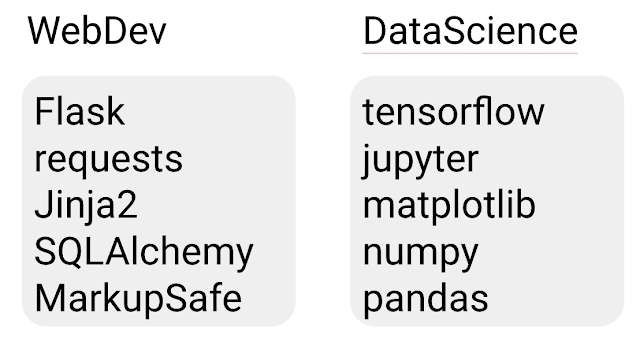

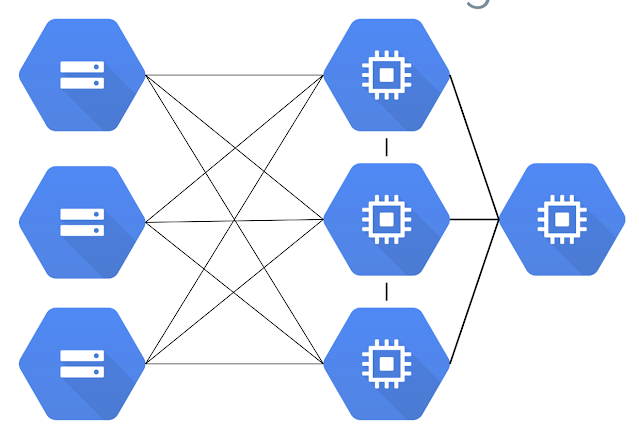

Streaming your big data down to your local compute environment is slow and expensive. In this episode of AI Adventures, we'll take a look at how to bring your notebook environment to your data!

What's better than an interactive Python notebook? An interactive Python notebook with fast and easy data connectivity, of course! We saw how useful Jupyter notebooks are. This time we will see how to take them further by running them in the cloud with many extra goodies.

Data, but big

When you work with larger and larger datasets in the cloud, it becomes increasingly unwieldy to interact with them using your local machine. It is difficult to download statistically representative samples of the data to check your code, and streaming the data to train locally relies on a stable connection. So what should a data scientist do? If you can't bring your data to your compute, bring your compute to your data! Let's see how we can run a notebook environment in