Training in the cloud: Cloud Machine Learning Engine

In the previous episode, we talked about the problems you face when your dataset is too big to fit on your local machine, and we discussed how to move that data to the cloud with scalable storage.

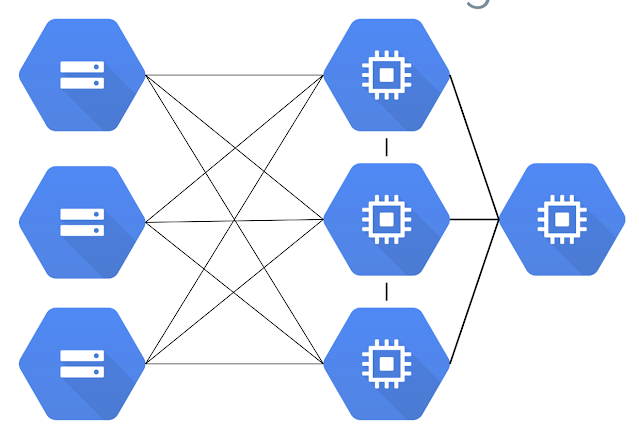

Today we are on to the second half of that problem: wrangling the compute resources. When training large models, the current approach involves training in parallel. Our data is split up and sent to many worker machines, and then the model must put the information and signals it receives from each machine back together to create a fully trained model.
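To make the "split the data, then put the signals back together" idea concrete, here is a toy sketch in plain Python. It assumes a one-parameter model y = w * x and simulates the workers with ordinary function calls, so it shows only the arithmetic of data-parallel training, not how any real service distributes the work. All function names are made up for this illustration.

```python
# Toy data-parallel training for the model y = w * x (squared-error loss).
# The "workers" here are plain function calls, not real machines.

def shard(data, num_workers):
    """Split the dataset into num_workers interleaved shards."""
    return [data[i::num_workers] for i in range(num_workers)]

def gradient(w, examples):
    """Mean gradient of (w*x - y)^2 with respect to w over the examples."""
    return sum(2 * (w * x - y) * x for x, y in examples) / len(examples)

def parallel_step(w, data, num_workers, learning_rate):
    """Each worker computes a gradient on its shard; the results are averaged."""
    worker_grads = [gradient(w, s) for s in shard(data, num_workers)]
    combined = sum(worker_grads) / len(worker_grads)
    return w - learning_rate * combined

data = [(x, 3.0 * x) for x in range(1, 9)]  # 8 examples, true weight is 3.0
w = 0.0
for _ in range(200):
    w = parallel_step(w, data, num_workers=4, learning_rate=0.01)
print(round(w, 3))
```

Because every shard here has the same size, averaging the per-worker gradients gives the same update as computing one gradient over the whole dataset, which is why the split does not change the result.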

Do you like configuration?

If you wanted to, you could spin up some virtual machines, install the necessary libraries, network them together, and configure them to run distributed machine learning. And then when you're done, you would want to be sure to take those machines down.

While this may seem easy on the surface, it can be challenging if you are not familiar with things like installing GPU drivers or compatibility issues between different versions of the underlying libraries.

Cloud Machine Learning Engine

Fortunately, we will use the training functionality of Cloud Machine Learning Engine to go from Python code to a trained model, no infrastructure work required! The service automatically acquires and configures resources as needed, and shuts things down when training is complete.

Here are the main steps to use Cloud ML Engine:

1) Package your Python code

2) Create a configuration file describing the types of machines you want

3) Submit your training job to the cloud

Let's take a look at how to set up our training to take advantage of this service.

Step 1: Package your Python code

We moved the Python code from our Jupyter notebook into its own script. Let's call that file task.py. It acts as our Python module, which can be called from other files.

We now wrap task.py inside a Python package. A Python package is created by placing the module inside a folder, here called 'trainer', alongside an empty file named __init__.py.

Our final file structure is a folder called trainer containing two files: __init__.py and task.py. Our package is called trainer, and our module path is trainer.task. If you want to split the code into more components, you can include those in this folder as well. For example, you might have a util.py in the trainer folder.
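If you would like to see that layout in action, the sketch below builds it in a temporary directory and imports the module by its path. The body written into task.py is only a placeholder standing in for your real training code, and the helper name make_trainer_package is made up for this example.

```python
import importlib
import sys
import tempfile
from pathlib import Path

def make_trainer_package(root):
    """Create root/trainer/ with an empty __init__.py and a stub task.py."""
    package_dir = Path(root) / "trainer"
    package_dir.mkdir(parents=True, exist_ok=True)
    # The empty file marks the folder as a package.
    (package_dir / "__init__.py").write_text("")
    # Placeholder body; the real task.py would hold the training code.
    (package_dir / "task.py").write_text(
        "def main():\n    return 'training would start here'\n"
    )
    return package_dir

root = tempfile.mkdtemp()
package_dir = make_trainer_package(root)
print(sorted(p.name for p in package_dir.glob("*.py")))

sys.path.insert(0, root)          # make the folder that contains trainer/ importable
importlib.invalidate_caches()
task = importlib.import_module("trainer.task")  # the module path from the text
print(task.__name__, "->", task.main())
```

Note that the folder added to the import path is the one that contains trainer, not trainer itself. That is what makes the dotted name trainer.task resolve.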

Step 2: Configuration file: config.yaml

Once our code is packaged up as a Python package, it's time to create a configuration file that specifies the machines you want to run your training on. You can run your training on just a small number of machines (as few as one), or on many machines with GPUs attached to them.

There are some predefined specifications that make it easier to get started, and once you outgrow those, you can configure a custom architecture to your heart's content.
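As a rough illustration of what goes into config.yaml, the sketch below prints two variants: one that relies on a predefined tier, and one that spells out a custom cluster. The helper render_config is made up for this example, and the tier names, machine types and counts are illustrative values that we assume match the service's trainingInput fields, so check the current documentation before copying them.

```python
def render_config(scale_tier, **custom_fields):
    """Return config.yaml text for a training job.

    scale_tier: a predefined tier name, or "CUSTOM" to describe machines by hand.
    custom_fields: extra trainingInput keys, used only with the CUSTOM tier.
    """
    if custom_fields and scale_tier != "CUSTOM":
        raise ValueError("machine details only apply when scale_tier is CUSTOM")
    lines = ["trainingInput:", f"  scaleTier: {scale_tier}"]
    for key, value in custom_fields.items():
        lines.append(f"  {key}: {value}")
    return "\n".join(lines) + "\n"

# A predefined tier: a single setting is enough to get started.
print(render_config("STANDARD_1"))

# A custom cluster: illustrative machine types and counts.
custom = render_config(
    "CUSTOM",
    masterType="standard_gpu",
    workerType="standard_gpu",
    parameterServerType="standard",
    workerCount=4,
    parameterServerCount=2,
)
print(custom)
```

Writing the returned text to a file named config.yaml gives you the file this step describes.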

Now we have our Python code packaged up and our configuration file written. Let's move on to the step you have all been waiting for: training!

Step 3: Submit your training job

To submit a training job, we will use the gcloud command-line tool and run gcloud ml-engine jobs submit training. There is also an equivalent REST API.

We specify a unique job name, the package path and module name, the region for the job to run in, and a Cloud Storage directory to hold the output of your training. Be sure to run the job in the same region where your data is stored to get optimal performance.

gcloud ml-engine jobs submit training $JOB_ID \
    --package-path=trainer \
    --module-name=trainer.task \
    --region=us-central1 \
    --job-dir=gs://cloudml-demo/widendeep

Once you run this command, your Python package will be zipped and uploaded to the directory we just specified. From there, the package will run in the cloud, on the machines we specified in the configuration.
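If you would rather drive the submission from a script, one possible sketch is to assemble the same arguments in Python. The helper names are made up for this example, the timestamp suffix is just one way to keep job names unique, and the optional --config flag is how we assume the config.yaml from step 2 gets attached. The script only prints the command; the commented-out line shows where it would actually be run.

```python
from datetime import datetime

def unique_job_id(prefix, now=None):
    """Append a timestamp so each submission gets a distinct job name."""
    now = now or datetime.now()
    return f"{prefix}_{now.strftime('%Y%m%d_%H%M%S')}"

def submit_command(job_id, job_dir, region, config=None):
    """Return the gcloud argument list for submitting a training job."""
    args = [
        "gcloud", "ml-engine", "jobs", "submit", "training", job_id,
        "--package-path=trainer",
        "--module-name=trainer.task",
        f"--region={region}",
        f"--job-dir={job_dir}",
    ]
    if config:
        args.append(f"--config={config}")
    return args

# A fixed, arbitrary timestamp keeps this example reproducible.
job_id = unique_job_id("widendeep", datetime(2018, 1, 2, 3, 4, 5))
args = submit_command(
    job_id, "gs://cloudml-demo/widendeep", "us-central1", config="config.yaml"
)
print(" ".join(args))
# To actually submit: import subprocess; subprocess.run(args, check=True)
```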

Monitor your training!

You can monitor your training job by going to ML Engine in the Cloud Console and selecting Jobs.

There we see a list of all the jobs we have run, including the current one. On the right is a timer showing how long the job has taken, as well as a link to the log information coming from the model.
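The same status can also be checked from a script. The sketch below assumes that gcloud ml-engine jobs describe with --format=json returns an object with a state field, and that SUCCEEDED, FAILED and CANCELLED are the states a job ends in. The sample response is hand-written for illustration, not real service output.

```python
import json

# States after which a job will not change again (assumed from the job API).
FINISHED_STATES = {"SUCCEEDED", "FAILED", "CANCELLED"}

def describe_command(job_id):
    """Argument list that asks gcloud for a job's status as JSON."""
    return ["gcloud", "ml-engine", "jobs", "describe", job_id, "--format=json"]

def job_state(describe_output):
    """Extract the state field from the JSON text returned by describe."""
    return json.loads(describe_output).get("state", "STATE_UNSPECIFIED")

def is_finished(describe_output):
    """True once the job has reached a state it will not leave."""
    return job_state(describe_output) in FINISHED_STATES

# Hand-written sample response, not real service output.
sample = '{"jobId": "widendeep_20180102_030405", "state": "RUNNING"}'
print(job_state(sample), is_finished(sample))
```

A polling loop would run describe_command with subprocess every so often and stop once is_finished returns True.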

What about predictions?

Our code exports the trained model to the Cloud Storage path that we provided as the job directory, so from here we can easily point the prediction service at the output and create a prediction service, as we discussed in an earlier episode on serverless predictions at scale.
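For the export to land in the right place, task.py needs to know the job directory. A minimal sketch, assuming the service hands the value to the module as a --job-dir command-line argument, looks like this. The "export" subfolder name is a hypothetical choice for this example.

```python
import argparse

def parse_args(argv=None):
    """Read the output location that the training service hands to the module."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--job-dir", required=True,
                        help="Cloud Storage path for training output")
    return parser.parse_args(argv)

def export_path(job_dir, subdir="export"):
    """Join with '/' by hand because gs:// paths are not local file paths."""
    return job_dir.rstrip("/") + "/" + subdir

# Simulate the arguments the module would receive when the job starts.
args = parse_args(["--job-dir", "gs://cloudml-demo/widendeep"])
print(export_path(args.job_dir))
```

The training code would then write the saved model under that path, and the prediction service would be pointed at the same location.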
