Deploying scikit-learn Models at Scale


Scikit-learn is great for putting together a quick model to test out your dataset. But what if you want to run it against incoming live data? Find out how to serve your scikit-learn model in an auto-scaling, serverless environment!

Suppose you have a zoo ...

Suppose you have a model that you trained with scikit-learn, and now you want to set up a prediction server. Let's see how to do this using our code from the previous section about animals at the zoo.

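To export the model, we will use the joblib library. Older scikit-learn versions bundled it as sklearn.externals.joblib, but that copy was deprecated in version 0.21 and removed in 0.23, so on current versions you should install and import the standalone joblib package.

import joblib  # older scikit-learn: from sklearn.externals import joblib

joblib.dump(clf, 'model.joblib')  # clf is the classifier trained earlier

We can use joblib.dump() to export the model to a file. We'll call ours model.joblib.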
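Here is a minimal, self-contained sketch of the export step. Because the zoo data isn't reproduced here, it trains a DecisionTreeClassifier on a small synthetic dataset (the features, labels, and choice of classifier are all assumptions, not the original kernel's code), dumps it to model.joblib, and reloads it to check that the round trip preserves the model's predictions.

```python
import joblib
import numpy as np
from sklearn.tree import DecisionTreeClassifier

# Hypothetical stand-in for the zoo data: 100 animals with 16 binary
# features and a class label from 1 to 7.
rng = np.random.default_rng(0)
X = rng.integers(0, 2, size=(100, 16))
y = rng.integers(1, 8, size=100)

clf = DecisionTreeClassifier(random_state=0)
clf.fit(X, y)

# Save the trained model under the file name the serving service looks for.
joblib.dump(clf, "model.joblib")

# Reload the file and confirm the restored model predicts exactly like the original.
restored = joblib.load("model.joblib")
same_predictions = bool((restored.predict(X) == clf.predict(X)).all())
print(same_predictions)
```

Reloading right after saving is a cheap sanity check before uploading anything: if the file can't be loaded locally, the serving environment won't be able to load it either.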

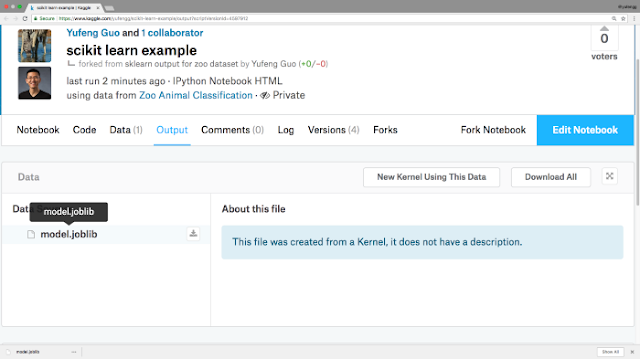

Once we commit and run this kernel, we can retrieve the model file from the kernel's output.

model.joblib: ready for download

With our trained scikit-learn model in hand, we are ready to load it into Google Cloud ML Engine to serve predictions.

That's right, we get all the auto-scaling, secured REST API goodness not only for TensorFlow but also for scikit-learn (and XGBoost)! This makes it easy to switch between scikit-learn and TensorFlow.

Serve some pie ... and predict

The first step in getting our model into the cloud is to upload the model.joblib file to Google Cloud Storage.

Note that my model.joblib file is wrapped in a folder called zoo_model.

Organization tip: the file must be named literally "model.joblib", so you'll probably want to keep each one in a folder named after its model. Otherwise, as you create more models, they'll all be called model.joblib!
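The folder-per-model layout can be sketched in Python with the google-cloud-storage client library. Using that library is an assumption (the walkthrough doesn't say which upload tool was used), and the bucket name my-bucket is a hypothetical placeholder; zoo_model comes from the text. The pure helpers build the object path and folder URI, and the upload function imports the client lazily, so the helpers run even where the library or credentials are missing.

```python
from pathlib import PurePosixPath

# The serving service expects this exact file name inside the model folder.
REQUIRED_FILENAME = "model.joblib"


def blob_name_for(model_folder: str) -> str:
    """Return the object path for a model, e.g. 'zoo_model/model.joblib'."""
    return str(PurePosixPath(model_folder) / REQUIRED_FILENAME)


def deployment_uri(bucket_name: str, model_folder: str) -> str:
    """Return the gs:// URI of the folder that holds the model file."""
    return f"gs://{bucket_name}/{model_folder}/"


def upload_model(local_path: str, bucket_name: str, model_folder: str) -> str:
    """Upload a local model.joblib into its own folder in a Cloud Storage bucket.

    Needs the google-cloud-storage package and application default credentials.
    """
    from google.cloud import storage  # imported lazily, only when uploading

    client = storage.Client()
    blob = client.bucket(bucket_name).blob(blob_name_for(model_folder))
    blob.upload_from_filename(local_path)
    return deployment_uri(bucket_name, model_folder)


print(blob_name_for("zoo_model"))
print(deployment_uri("my-bucket", "zoo_model"))
```

Keeping the fixed file name in one constant and deriving every path from the folder name means a second model only needs a new folder, never a renamed file.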

We create our model and version, specifying that we're loading a scikit-learn model, and select the Cloud ML Engine runtime version as well as the version of Python we used to export this model. Because we ran our training on Kaggle, that's Python 3.
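The same model-and-version setup can also be scripted. This sketch assumes the Google API Python client (googleapiclient) and the ml v1 REST API, whose Version resource accepts deploymentUri, framework, runtimeVersion, and pythonVersion fields. The project ID, model name, version name, runtime version "1.15", and Python version "3.7" are hypothetical placeholders, not values from the walkthrough; match them to the environment the model was exported from.

```python
def version_body(version_name: str, deployment_uri: str,
                 runtime_version: str = "1.15", python_version: str = "3.7") -> dict:
    """Build the request body for creating a scikit-learn model version.

    The default runtime and Python versions are placeholders; they should match
    the scikit-learn and Python versions used when the model was exported.
    """
    return {
        "name": version_name,
        "deploymentUri": deployment_uri,  # folder containing model.joblib
        "framework": "SCIKIT_LEARN",
        "runtimeVersion": runtime_version,
        "pythonVersion": python_version,
    }


def create_model_and_version(project_id: str, model_name: str, body: dict) -> dict:
    """Create the model resource, then a version under it (needs credentials)."""
    from googleapiclient import discovery  # imported lazily, only when deploying

    ml = discovery.build("ml", "v1")
    ml.projects().models().create(
        parent=f"projects/{project_id}", body={"name": model_name}
    ).execute()
    # Version creation is asynchronous; this returns a long-running operation.
    return ml.projects().models().versions().create(
        parent=f"projects/{project_id}/models/{model_name}", body=body
    ).execute()


body = version_body("v1", "gs://my-bucket/zoo_model/")
print(body["framework"], body["deploymentUri"])
```

Building the body separately from the API call keeps the configuration easy to inspect, and the version still needs a few minutes to become ready after the call returns.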

Give it some time to set up. And that's basically it! We now have a scikit-learn model serving in the cloud!

Who you gonna call? Cloud ML predictions!

Of course, a scalable model is of no use unless we can actually get predictions out of it. Let's see how simple that is. I went ahead and pulled a sample row from our data whose answer should be "". We will pass the data to Cloud ML Engine as a simple array, encoded in a JSON file.

print(X_test.iloc[10:11].values.tolist())

Here I take the test features DataFrame, slice out row 10 with iloc[10:11], and call .values to get the underlying NumPy array. Unfortunately, NumPy arrays don't print with commas between their values, and we really want commas, so I converted the NumPy array to a Python list with .tolist() and printed that.
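The comma problem is easy to see with a small stand-in DataFrame (the column names and values below are hypothetical). Printing the NumPy array shows space-separated values, while .tolist() gives a Python list that json.dumps can serialize. The sketch then writes the row in the newline-delimited format read by gcloud's --json-instances flag, one JSON instance per line; the file name input.json is an assumption.

```python
import json

import pandas as pd

# Hypothetical stand-in for the test features; the real zoo columns differ.
X_test = pd.DataFrame(
    [[1, 0, 0, 1], [0, 1, 1, 0]] * 6,
    columns=["hair", "feathers", "eggs", "milk"],
)

row = X_test.iloc[10:11].values  # 2-D NumPy array holding one row
print(row)                       # NumPy prints the values without commas
instances = row.tolist()         # nested Python lists, printed with commas
print(instances)

# Write one JSON-encoded instance per line.
with open("input.json", "w") as f:
    for instance in instances:
        f.write(json.dumps(instance) + "\n")
```

Slicing with iloc[10:11] rather than iloc[10] keeps the result two-dimensional, so the same loop works unchanged when you send several rows at once.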

I saved the array to an input file, and now we can call the prediction REST API for our scikit-learn model. gcloud has a built-in utility for doing this. We're expecting the answer noted above and, of course, that's what comes back! Huzzah!
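If you'd rather call the prediction API from Python than from gcloud, here is a sketch using the Google API Python client's projects().predict method on the ml v1 API, which takes the version's resource name and a body of the form {"instances": [...]}. The project, model, and version names are hypothetical placeholders, and the actual request needs credentials and a deployed version, so only the helpers that prepare the request run offline.

```python
import json


def version_resource(project_id: str, model_name: str, version_name: str) -> str:
    """Resource name of the deployed version that receives prediction requests."""
    return f"projects/{project_id}/models/{model_name}/versions/{version_name}"


def load_instances(path: str) -> list:
    """Read newline-delimited JSON instances (one per line), skipping blank lines."""
    with open(path) as f:
        return [json.loads(line) for line in f if line.strip()]


def predict(name: str, instances: list) -> list:
    """Send an online prediction request and return the list of predictions."""
    from googleapiclient import discovery  # imported lazily, only when predicting

    ml = discovery.build("ml", "v1")
    response = ml.projects().predict(name=name, body={"instances": instances}).execute()
    if "error" in response:
        raise RuntimeError(response["error"])
    return response["predictions"]


name = version_resource("my-project", "zoo", "v1")
print(name)
```

With the input file written earlier, a call would look like predict(name, load_instances("input.json")), returning one prediction per instance line.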

What now

You can follow the steps in this video to put your scikit-learn model into production, or turn them into an automated pipeline so that every time you create a new model, it gets deployed and you can test it! Head over to Cloud ML Engine and upload your scikit-learn model to get auto-scaling predictions at your fingertips!

For a more detailed treatment of this topic, here are some good guides from the documentation:

https://cloud.google.com/ml-engine/docs/scikit/quickstart

Kaggle kernel → http://bit.ly/2v31tMh

Cloud ML Engine with scikit-learn quickstart → http://bit.ly/2Mdtszp
