Introducing TensorFlow Privacy: Learning with Differential Privacy for Training Data

Today, we are excited to announce TensorFlow Privacy (GitHub), an open-source library that makes it easier not only for developers to train machine-learning models with privacy, but also for researchers to advance the state of the art in machine learning with strong privacy guarantees.

Modern machine learning is increasingly used to create amazing new technologies and user experiences, many of which involve training machines to learn responsibly from sensitive data, such as personal photos or emails. Ideally, the parameters of trained machine-learning models should encode general patterns rather than facts about specific training examples. To ensure this, and to give strong privacy guarantees when the training data is sensitive, it is possible to use techniques based on the theory of differential privacy. In particular, when training on users' data, those techniques offer strong mathematical guarantees that models do not learn or remember the details of any specific user. Especially for deep learning, these additional guarantees can usefully strengthen the protections offered by other privacy techniques, whether established ones, such as thresholding and data elision, or new ones, such as TensorFlow Federated learning.


For several years, Google has led both foundational research on differential privacy and the development of practical differential-privacy mechanisms, with a recent focus on machine learning applications (see this, that, or this research paper). Last year, Google published its Responsible AI Practices, detailing our recommended practices for the responsible development of machine learning systems and products; even before this publication, we had been working hard to make it easy for external developers to apply such practices to their own products.

One result of our efforts is today's announcement of TensorFlow Privacy and the updated technical whitepaper, which describes its privacy mechanisms in more detail.

To use TensorFlow Privacy, no expertise in privacy or its underlying mathematics should be required: those using standard TensorFlow mechanisms should not have to change their model architectures, training procedures, or processes. Instead, to train models that protect the privacy of their training data, it is often sufficient to make some simple code changes and tune the hyperparameters relevant to privacy.

Learning a language with privacy

As a concrete example of differentially private training, let us consider the training of character-level, recurrent language models on text sequences. Language modeling with neural networks is an essential deep learning task, used in innumerable applications, many of which are based on training with sensitive data. Based on example code from the TensorFlow Privacy GitHub repository, we train two models, one in the standard manner and one with differential privacy, using the same model architecture.

Both models do well at modeling the English language in financial news articles from the standard Penn Treebank training dataset. However, if the slight differences between the two models were due to a failure to capture some essential, core aspects of the language distribution, this would cast doubt on the utility of the differentially private model. (On the other hand, the private model's utility might still be fine, even if it failed to capture some esoteric, unique details in the training data.)

To confirm the utility of the private model, we can look at the two models' performance on the corpus of training and test data and examine the sets of sentences on which they agree and disagree. To look at their commonality, we can measure their similarity on modeled sentences to see whether both models accept the same core language; in this case, both models accept and score highly (i.e., assign low perplexity to) most of the training-data sequences. For example, both models score highly on the following financial news sentences (shown in italics, as they are clearly in the distribution we wish to learn):

There was little trading and nothing to move the market

South Korea and Japan continue to be profitable

Commercial banks were powerful across the board
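To make "scoring highly" concrete: for a character-level model, perplexity is the exponential of the average negative log-probability per character, so a lower value means the model finds the sentence more plausible. The following is only a minimal sketch of that calculation, not the code used for the experiments described here; the function names, the toy probabilities, and the acceptance threshold are all assumptions made up for illustration.

```python
import math


def perplexity(char_log_probs):
    """Per-character perplexity from natural-log probabilities."""
    if not char_log_probs:
        raise ValueError("need at least one character log-probability")
    avg_neg_log_prob = -sum(char_log_probs) / len(char_log_probs)
    return math.exp(avg_neg_log_prob)


def both_accept(perplexity_a, perplexity_b, threshold):
    """True when both models score the sequence at or below the threshold."""
    return perplexity_a <= threshold and perplexity_b <= threshold


# Toy per-character probabilities for one sentence (illustrative values only).
standard_model = [math.log(p) for p in (0.9, 0.8, 0.95, 0.9)]
private_model = [math.log(p) for p in (0.85, 0.8, 0.9, 0.9)]

ppl_standard = perplexity(standard_model)
ppl_private = perplexity(private_model)

# Hypothetical acceptance threshold; a real study would choose it empirically.
print(both_accept(ppl_standard, ppl_private, threshold=1.5))
```

Sentences on which the two perplexities are both low count as agreement; sentences where one is low and the other is high are the interesting disagreements.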

To look at their differences, we can examine training-data sentences where the two models' scores diverge greatly. For example, the following three training-data sentences are all scored highly and accepted by the regular language model, since they are effectively memorized during standard training. The differentially private model, however, scores these sentences very low and does not accept them. (Below, the sentences are shown in bold, as they seem to lie outside the language distribution we wish to learn.)

My God and I know I am right and blameless

All of the above sentences seem like they should be uncommon in financial news; moreover, they seem to be sensible candidates for privacy protection, since such rare, strange-looking sentences might identify or reveal information about individuals in models trained on sensitive data. The first of the three sentences is a long sequence of random words that occurs in the training data for technical reasons; the second sentence is part Polish; the third sentence, although natural-looking English, is not from the language of financial news being modeled. These examples were selected by hand, but a full inspection confirms that the training-data sentences not accepted by the differentially private model generally lie outside the normal language distribution of financial news articles. Furthermore, by evaluating test data, we can verify that such esoteric sentences are a basis for the loss in quality between the private and non-private models (both at about 1.1 perplexity). Thus, although the nominal perplexity loss is around 6%, the private model's performance may hardly be reduced at all on the sentences we care about.

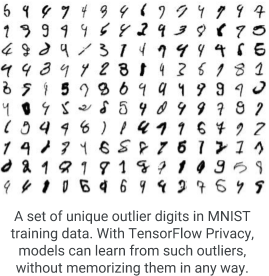

Clearly, at least in part, the differences between the two models result from the private model failing to memorize rare sequences that are abnormal in the training data. We can quantify this effect by building on our earlier work on measuring unintended memorization in neural networks, which intentionally inserts unique, random canary sentences into the training data and assesses the canaries' impact on the trained model. In this case, the insertion of a single random canary sentence is sufficient for that canary to be completely memorized by the non-private model. However, the model trained with differential privacy is indistinguishable in the face of any single inserted canary; only when the same random sequence is present many, many times in the training data will the private model learn anything about it. Notably, this is true for all types of machine-learning models (for example, see the figure with rare examples from MNIST training data above), and it remains true even when the mathematical, formal upper bound on the model's privacy is far too large to offer any guarantees in theory.
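One way to turn the canary experiment into a number is to rank the inserted canary against other random sequences that were never in the training data: if the model finds the canary far more plausible than those, it has memorized it. The sketch below is only an illustration of that ranking idea under stated assumptions. The function name `exposure`, the log-ratio formula, and the toy perplexities are chosen for this example and are not taken from the post or from TensorFlow Privacy.

```python
import math


def exposure(canary_perplexity, reference_perplexities):
    """Rank-based memorization score for one inserted canary.

    reference_perplexities holds the model's perplexities on random
    sequences that were NOT inserted into the training data.
    """
    # Rank 1 means the canary looks more plausible than every reference.
    rank = 1 + sum(1 for p in reference_perplexities if p < canary_perplexity)
    total = len(reference_perplexities) + 1
    return math.log2(total) - math.log2(rank)


# Toy numbers (illustrative only): a memorized canary gets unusually low
# perplexity, an unmemorized one looks like any other random sequence.
references = [40.0, 55.0, 62.0, 48.0, 51.0, 59.0, 45.0]
print(exposure(1.2, references))   # canary ranked first among 8 sequences
print(exposure(60.0, references))  # canary ranked near the bottom
```

A score near zero says the canary is no more likely than any other random sequence, which is the behavior the text describes for the differentially private model.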

TensorFlow Privacy can prevent such memorization of rare details and, as visualized in the figure above, can guarantee that two machine-learning models will be indistinguishable whether or not some examples (e.g., some user's data) were used in their training.
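For readers who want the indistinguishability guarantee stated formally, the standard definition of differential privacy can be written as follows. The symbols are not used elsewhere in this post and are introduced here only for illustration: $\mathcal{M}$ is the randomized training procedure, $D$ and $D'$ are two training sets that differ in a single example, $S$ is any set of possible trained models, and $\varepsilon$ and $\delta$ are the privacy parameters (smaller values mean stronger privacy).

```latex
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta
```

Because the inequality must hold for every such pair of datasets and every set of outcomes, an observer of the trained model cannot tell with confidence whether any one example was part of the training data.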

Next steps and further reading

To get started with TensorFlow Privacy, you can check out the examples and tutorials in the GitHub repository. In particular, these include a detailed tutorial on how to perform differentially private training of the MNIST benchmark machine-learning task with traditional TensorFlow mechanisms, as well as with the newer, more eager approaches of TensorFlow 2.0 and Keras.

The crucial new step required to use TensorFlow Privacy is to set three new hyperparameters that control the way gradients are created, clipped, and noised. During training, differential privacy is ensured by optimizing models with a modified stochastic gradient descent that averages together multiple gradient updates induced by training-data examples, clips each gradient update to a certain maximum norm, and adds Gaussian random noise to the final average. This style of learning places a maximum bound on the effect of each training-data example and ensures that no single example has any influence, by itself, because of the added noise. Setting these three hyperparameters can be an art, but the TensorFlow Privacy repository includes guidelines for how to select them for the concrete examples.
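The clip, average, and add-noise recipe described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the TensorFlow Privacy implementation and not its API: the function and parameter names (`dp_sgd_step`, `l2_norm_clip`, `noise_multiplier`) are chosen for this example, gradients are plain lists of floats, and each training example is clipped individually.

```python
import math
import random


def clip_by_l2_norm(grad, l2_norm_clip):
    """Scale grad down so that its L2 norm is at most l2_norm_clip."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, l2_norm_clip / norm) if norm > 0.0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, learning_rate,
                l2_norm_clip, noise_multiplier, rng):
    """One noisy gradient-descent update from per-example gradients."""
    batch_size = len(per_example_grads)
    # 1. Clip each example's gradient to bound its individual influence.
    clipped = [clip_by_l2_norm(g, l2_norm_clip) for g in per_example_grads]
    # 2. Average the clipped gradients coordinate by coordinate.
    averaged = [sum(col) / batch_size for col in zip(*clipped)]
    # 3. Add Gaussian noise scaled to the clipping norm (assumed scaling).
    stddev = noise_multiplier * l2_norm_clip / batch_size
    noisy = [a + rng.gauss(0.0, stddev) for a in averaged]
    # 4. Take an ordinary gradient-descent step with the noisy average.
    return [p - learning_rate * n for p, n in zip(params, noisy)]


# Toy usage: two parameters, a batch of two per-example gradients.
rng = random.Random(0)
new_params = dp_sgd_step(
    params=[0.0, 0.0],
    per_example_grads=[[3.0, 4.0], [0.3, 0.4]],
    learning_rate=0.1,
    l2_norm_clip=1.0,
    noise_multiplier=1.1,
    rng=rng,
)
print(new_params)
```

In this sketch the first gradient has norm 5 and is scaled down to norm 1, while the second has norm 0.5 and passes through unchanged. A larger noise multiplier gives stronger privacy at the cost of noisier updates, which is the trade-off the hyperparameter tuning has to balance.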

We intend for TensorFlow Privacy to develop into a hub of best-of-breed techniques for training machine-learning models with strong privacy guarantees. Therefore, we encourage all interested parties to get involved, for example by doing the following:

Read further about differential privacy and its application to machine learning in this or that blog post.

For practitioners, try applying TensorFlow Privacy to your own machine-learning models, and experiment with the balance between privacy and utility by tuning hyperparameters, model capacity and architecture, activation functions, etc.

For researchers, try advancing the state of the art in real-world machine learning with strong privacy guarantees through improved analysis, e.g., of model parameter selection.

Responsible AI Practices → https://goo.gle/2qec9YN

GitHub → https://goo.gle/2Pxm5HL
