Data science project using Kaggle Datasets and Kernels

Cooking up a data science project using Kaggle Datasets and Kernels

We start with fresh ingredients (data), prepare them using different tools, and work together toward a delicious result: a published dataset and some analysis that we share with the world.

Working with datasets and kernels

We will pull public data from the City of Los Angeles open data portal, including environmental health violations from restaurants in Los Angeles. We will then create a new dataset from that data and collaborate on a kernel before releasing it to the world.

In this post you will learn:

How to create a new, private Kaggle dataset from raw data

How to share your dataset with your collaborators before making it public

How to add collaborators to private kernels

How to work with collaborators in Kaggle kernels
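As a rough sketch of the first step for readers who prefer scripting to the web interface: the Kaggle command-line tool reads a `dataset-metadata.json` file from the folder that holds your raw files. The helper below only writes that file. The function name, the folder name, the `your-username` owner, and the slug are all placeholders chosen for illustration, not values from this post.

```python
import json
from pathlib import Path


def write_dataset_metadata(folder, title, owner, slug, license_name="CC0-1.0"):
    """Write a minimal dataset-metadata.json next to the raw data files.

    All argument values are supplied by the caller; nothing here talks to
    Kaggle, it only prepares the folder for a later upload.
    """
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    metadata = {
        "title": title,
        "id": f"{owner}/{slug}",  # "<kaggle username>/<dataset slug>"
        "licenses": [{"name": license_name}],
    }
    path = folder / "dataset-metadata.json"
    path.write_text(json.dumps(metadata, indent=2))
    return path


if __name__ == "__main__":
    # Placeholder values: replace with your own username and dataset name.
    print(write_dataset_metadata(
        "la-health-violations",
        title="LA Health Code Violations",
        owner="your-username",
        slug="la-health-violations",
    ))
```

With the raw files copied into the same folder, running `kaggle datasets create -p la-health-violations` should upload it as a private dataset, since the command creates private datasets unless you pass `-u`. Collaborators can then be added from the dataset's settings page before you make it public.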

Data is most powerful when it is paired with reproducible code and shared with experts and the community at large. By placing data and code on a shared, consistent platform, you get the best of collaboration together with productive, high-performance notebooks.
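To make "reproducible code" concrete, here is a minimal sketch of the kind of first cell a collaborator might add to a kernel: it counts the most frequent violation descriptions in a CSV. The column name `violation_description` and the sample rows are invented for illustration. They are not taken from the real LA dataset, so check the actual column names before reusing this.

```python
import csv
import io
from collections import Counter


def top_violations(csv_file, column="violation_description", n=3):
    """Return the n most common values in `column` as (value, count) pairs."""
    reader = csv.DictReader(csv_file)
    counts = Counter(row[column] for row in reader if row.get(column))
    return counts.most_common(n)


if __name__ == "__main__":
    # Invented sample rows standing in for the real file.
    sample = io.StringIO(
        "facility_name,violation_description\n"
        "Example Cafe,Floors and walls\n"
        "Example Diner,Food storage\n"
        "Example Grill,Floors and walls\n"
    )
    print(top_violations(sample, n=2))
```

Because the function takes any open file object, the same call works on the real CSV once it is attached to the kernel, for example `top_violations(open(path_to_csv, newline=""))` with the path to your own file.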

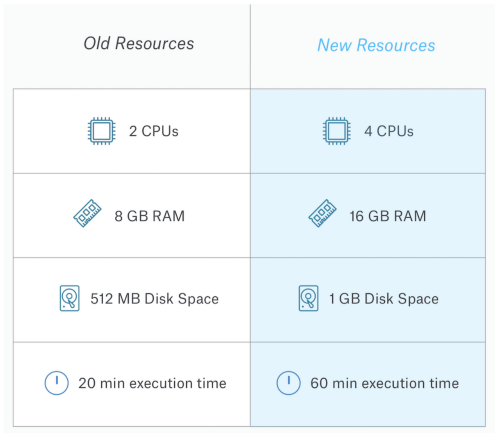

Resources

Now that you know how to create datasets and kernels, it's time to create your own! Go to Kaggle today to start creating new datasets.

Megan Risdal is a product lead for Kaggle Datasets, which means she works with engineers, designers, and the Kaggle community of 1.7 million data scientists to build tools for finding, sharing, and analyzing data. She wants Kaggle to be the best place for people to get help with their data science projects.

Get started with Kaggle → https://kaggle.com/datasets

Introduction to Kaggle Kernels → http://bit.ly/2z409xm

[Dataset] LA County Health Code Violations → http://bit.ly/2MFwyvO

[Kernel] Exploring LA County Health Code Violations → http://bit.ly/2KIBz6e
