Walk-through: Getting started with Keras, using Kaggle

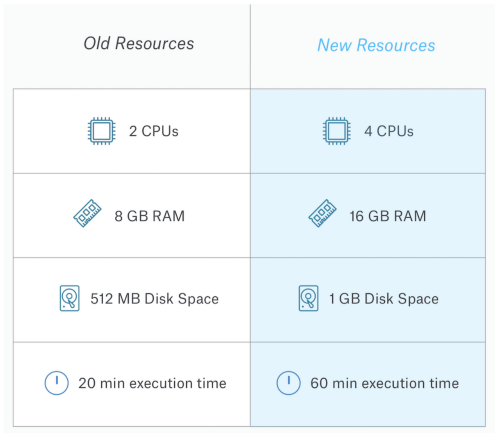

This is a short post. The real substance is in the screencast below, where I go through the code!

If you're just starting out in the world of machine learning (and let's be real here, who isn't?), the tooling just seems to be getting better and better. Keras has been a major tool for some time, and is now integrated into TensorFlow. Good together, right? And it just so happens that it has never been easier to get started with Keras.

But wait, what exactly is Keras, and how can you use it to start building your own machine learning models? Today, I want to show you how to get started with Keras in the fastest possible way. Not only is Keras built into TensorFlow via `tensorflow.keras`, you also don't have to install or configure anything if you use a tool like Kaggle Kernels.

Related: Introduction to Kaggle Kernels. Find out what Kaggle Kernels are and how to get started. (towardsdatascience.com)

Playing around with Ker...
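To give a feel for what "building a model with Keras" looks like, here is a minimal sketch rather than the code from the screencast. It assumes TensorFlow 2.x is available (as it typically is in a Kaggle Kernel), and the toy dataset, layer sizes, and training settings are all made up for illustration.

```python
import numpy as np
import tensorflow as tf

# Toy data, invented for this sketch: 200 samples, 4 features, binary label.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 4)).astype("float32")
y = (x.sum(axis=1) > 0).astype("float32")

# A small fully connected classifier, built with the Keras API that ships
# inside TensorFlow (tf.keras). Layer sizes are arbitrary choices.
model = tf.keras.Sequential([
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# Configure the optimizer, loss, and metric to track during training.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Train briefly, then predict probabilities for the first three samples.
history = model.fit(x, y, epochs=5, batch_size=32, verbose=0)
predictions = model.predict(x[:3], verbose=0)
print(predictions.shape)
```

The same define, compile, fit, predict pattern carries over to real datasets; only the data loading and the layer stack change.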