Serverless predictions at scale

Once we are happy with our trained machine learning model, how can we serve predictions at scale? Find out in this episode of Cloud AI Adventures!

Google's Cloud Machine Learning Engine enables you to create a prediction service for your TensorFlow model without any ops work. Get more time to work with your data by going from a trained model to a deployed, auto-scaled prediction service in minutes.

Serving predictions: the final step

So, we have gathered our data, finished training a suitable model, and verified that it performs well. We are now ready to move to the final stage: serving predictions.

When taking on the challenge of serving predictions, we ideally want to deploy a model that is purpose-built for serving: in particular, a fast, lightweight model that is static, since we do not want any updates while serving.

Additionally, we want our prediction server to scale with demand, which adds another layer of complexity to the problem.

Exporting TensorFlow models

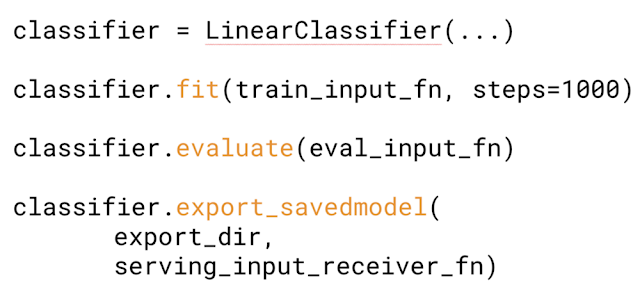

It turns out that TensorFlow has a built-in function that takes care of generating an optimized model for serving predictions! It handles all the necessary adjustments, which saves you a lot of work.

The function we are interested in is called export_savedmodel(), and once we are satisfied with the state of the trained model, we can run it directly on the classifier object.
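As a rough sketch of what that call looks like: in the TensorFlow 1.x Estimator API, export_savedmodel() takes a base export directory and a serving input receiver function that describes the inputs the served model will accept. The helper name `export_for_serving` is an illustrative assumption, not part of the original example, and the classifier is passed in so the sketch does not depend on a particular TensorFlow installation.

```python
def export_for_serving(classifier, serving_input_receiver_fn, export_dir_base):
    """Export a trained Estimator-style classifier for serving.

    Assumes `classifier` exposes the TensorFlow 1.x Estimator method
    export_savedmodel(export_dir_base, serving_input_receiver_fn).
    """
    # The classifier writes the exported model under export_dir_base
    # and returns the path of the directory it created.
    return classifier.export_savedmodel(export_dir_base, serving_input_receiver_fn)
```

With a real trained classifier, `serving_input_receiver_fn` would be built from the model's feature specification; how exactly depends on how the Iris features were defined during training.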

This takes a snapshot of your model and exports it as a set of files that you can use elsewhere. Over time, as your model improves, you can continue to produce updated exported models, giving you multiple versions of your model over time.
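Each call to export_savedmodel() writes into a new timestamp-named subdirectory of the export base directory, so successive exports sit side by side. Here is a minimal sketch, assuming that layout, of picking the most recent export; `latest_export` is a hypothetical helper name.

```python
from pathlib import Path


def latest_export(export_dir_base):
    """Return the newest export directory under export_dir_base, or None.

    Assumes each export lives in a subdirectory whose name is an
    integer timestamp, so the largest number is the newest export.
    """
    base = Path(export_dir_base)
    # Ignore anything that is not a numerically named directory.
    candidates = [p for p in base.iterdir() if p.is_dir() and p.name.isdigit()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: int(p.name))
```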

The exported files are made up of one file and one folder. The file is saved_model.pb, which defines the model structure. The variables folder holds two files, which supply the trained weights of our model.
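A quick way to sanity-check an export before using it is to confirm that layout. The sketch below assumes the layout just described (a saved_model.pb file next to a variables folder); the helper name `looks_like_saved_model` is made up for illustration.

```python
from pathlib import Path


def looks_like_saved_model(export_dir):
    """Return True if export_dir holds saved_model.pb and a non-empty variables folder."""
    root = Path(export_dir)
    model_file = root / "saved_model.pb"
    variables_dir = root / "variables"
    return (
        model_file.is_file()
        and variables_dir.is_dir()
        and any(variables_dir.iterdir())
    )
```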

Serving a model in production

Once you have an exported model, you are ready to serve it in production. Here you have two primary options: use TensorFlow Serving, or use the Cloud Machine Learning Engine prediction service.

TensorFlow Serving is a part of TensorFlow and is available on GitHub. It is useful if you enjoy configuring your own production infrastructure and scaling it for demand.

Today, however, we will focus our attention on the Cloud Machine Learning Engine prediction service, though the two have similar file interfaces.

Cloud Machine Learning Engine allows you to take an exported TensorFlow model and turn it into a prediction service with a built-in API endpoint and auto-scaling that goes all the way down to zero (that is, no compute charges when no one is requesting predictions!).

It also comes complete with a feature-rich command-line tool, API, and UI, so we can interact with it in a variety of ways depending on our preferences.

Deploying a new prediction model

Let's take a look at an example of how to use the Cloud Machine Learning Engine prediction service with our Iris example.

Export and upload

First, run export_savedmodel() on our trained classifier. This generates an exported model that we can use for our prediction service.

Next, we want to upload the files to Google Cloud Storage. Cloud Machine Learning Engine will read from Cloud Storage when creating a new model version.

Be sure to choose the regional storage class when creating your bucket, to make sure your compute and storage are in the same region.
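One way to do both steps is with the gsutil command-line tool. The sketch below only builds the commands as argument lists, so nothing is created or uploaded until you run them yourself (for example with `subprocess.run`). The helper name, bucket name, region, and paths are placeholders rather than values from the original example, and the `regional` class name reflects the storage classes available when this was written.

```python
def bucket_and_upload_commands(bucket, region, local_export_dir, remote_dir):
    """Build gsutil commands to create a regional bucket and upload an export.

    All values are supplied by the caller; nothing is executed here.
    """
    bucket_uri = "gs://{}".format(bucket)
    # "mb" makes a bucket; -c sets the storage class, -l the location.
    make_bucket = ["gsutil", "mb", "-c", "regional", "-l", region, bucket_uri]
    # "cp -r" copies the export directory recursively into the bucket.
    upload = ["gsutil", "cp", "-r", local_export_dir,
              "{}/{}".format(bucket_uri, remote_dir)]
    return make_bucket, upload
```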

Create a new model

In the Cloud Machine Learning Engine UI, we can create a new model, which is really just a wrapper for all our released versions. Versions hold our individual exported models, while the model abstraction helps route incoming traffic to the appropriate version of your choice.

Here we are in the models list view, where we can create a new model. All it takes to create a model is to give it a name. Let's call ours iris_model.

Create a new version

Next, we will create a version by choosing a name for this particular model version and pointing it to the Cloud Storage directory that holds our exported files. And just like that, we've created our model! All it took was pointing the service at our exported model and giving it a name.
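The same two steps can also be expressed through the service's REST API rather than the UI. The sketch below only builds the parent resource paths and request bodies; the function names, project ID, version name, and bucket path are hypothetical placeholders, and sending the requests is left to whichever client you prefer.

```python
def model_request(project, model_name):
    """Return (parent, body) for creating a model resource."""
    parent = "projects/{}".format(project)
    body = {"name": model_name}
    return parent, body


def version_request(project, model_name, version_name, deployment_uri):
    """Return (parent, body) for creating a version that points at exported files."""
    parent = "projects/{}/models/{}".format(project, model_name)
    # deploymentUri is the Cloud Storage directory holding the exported model.
    body = {"name": version_name, "deploymentUri": deployment_uri}
    return parent, body
```

For example, `version_request("my-project", "iris_model", "v1", "gs://my-bucket/iris")` describes a version named v1 under iris_model, mirroring what the UI form asks for: a name and a storage location.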

How could it take so little work? Well, the service handled all the operational aspects of setting up and securing the endpoint. In addition, we did not have to write our own code for scaling it out based on demand. And because this is the cloud, that elasticity means you don't have to pay for unused compute when demand is low.
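Once a version is deployed, calling the endpoint comes down to sending a JSON body with a list of instances to the model's predict method. The sketch below builds that resource name and body; the function name and project ID are placeholders, and the shape of each instance is an assumption, since it depends on the inputs the exported model was set up to accept.

```python
import json


def predict_request(project, model_name, instances, version_name=None):
    """Return (resource name, JSON body) for an online prediction request.

    If version_name is omitted, the name refers to the model itself and
    the service decides which version handles the request.
    """
    name = "projects/{}/models/{}".format(project, model_name)
    if version_name is not None:
        name += "/versions/{}".format(version_name)
    body = json.dumps({"instances": instances})
    return name, body
```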

By setting up a prediction service for our Iris model that did not require any ops work, we have been able to go from a trained model to a deployed, auto-scaling prediction service in a matter of minutes, which means more time to get back to working with our data!

Thanks for reading this episode of Cloud AI Adventures. Be sure to subscribe to catch future episodes as they come out.

If you want to know more about TensorFlow Serving, check out the talk on it from the TensorFlow Dev Summit earlier this year.
