Estimators Revisited: Deep Neural Networks

As the number of feature columns in a linear model grows, it can become increasingly difficult to achieve high accuracy in training, because the interactions between different columns become more complex. This is a known problem, and a particularly effective solution for data scientists is to use deep neural networks.

Why go deep

Deep neural networks can adapt to more complex datasets and generalize better to previously unseen data because of their multiple layers, which is why they are called “deep”. These layers let them fit more complex datasets than linear models can. The trade-off is that the model will tend to take longer to train, be larger, and be less interpretable. So why would anyone want to use one? Because it can reach a higher final accuracy.
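To see why extra layers matter, consider XOR, a standard toy dataset that is not linearly separable: no single weighted sum of the two inputs plus a threshold can label all four points correctly. The plain-Python sketch below (not TensorFlow, and with hand-picked rather than trained weights, chosen only for illustration) shows that one hidden layer of two ReLU units is enough to fit it exactly.

```python
def relu(z):
    return max(0.0, z)


def two_layer_xor(x1, x2):
    """Hand-wired network: 2 inputs -> 2 ReLU hidden units -> 1 output."""
    # Hidden layer: two ReLU units over the same sum, with different biases.
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1.0)
    # Output layer: a weighted sum of the hidden units (weights 1 and -2).
    return h1 - 2.0 * h2


XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for (x1, x2), target in XOR_DATA:
    print((x1, x2), "->", two_layer_xor(x1, x2), "target:", target)
```

A trained network would find weights like these through gradient descent rather than by hand; the point here is only that the hidden layer makes the fit possible at all.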

One of the hardest things about deep learning is getting all the parameters “just right”. Depending on your dataset, the possible configurations can seem virtually limitless. However, TensorFlow's built-in deep classifier and regressor classes (DNNClassifier and DNNRegressor) offer a reasonable set of defaults that you can start with right away, allowing you to move quickly and easily.

From linear to deep

Let's look at an example of how to update our iris example from a linear model to a deep neural network, often abbreviated as DNN.

I won't be showing off the 2000-column model that a DNN could handle... so I'm just going to use the same feature columns we've used throughout this series. The mechanics are the same either way.

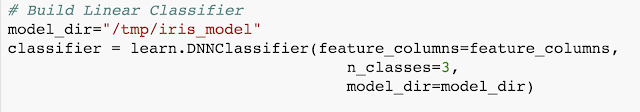

The main change is replacing our LinearClassifier class with DNNClassifier, which creates a deep neural network for us.
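To make the swap concrete without tying it to a particular TensorFlow version, here is a minimal plain-Python sketch of what each model computes for one iris-style example (four measurements in, three class scores out). The helper names (dense, relu, random_layer, linear_logits, dnn_logits) are hypothetical, the weights are random placeholders rather than trained values, and ReLU is used between layers because it is DNNClassifier's default activation. A linear classifier applies a single fully connected layer; a DNN stacks hidden layers in front of the same kind of output layer.

```python
import random


def dense(x, weights, bias):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(values):
    return [max(0.0, v) for v in values]


def random_layer(n_in, n_out, rng):
    """Placeholder (weights, bias) for one layer; a real model learns these in training."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear_logits(x, layer):
    """Linear model: features map straight to class scores in one layer."""
    weights, bias = layer
    return dense(x, weights, bias)


def dnn_logits(x, layers):
    """Deep model: hidden layers with ReLU, then a linear output layer."""
    *hidden, (w_out, b_out) = layers
    for weights, bias in hidden:
        x = relu(dense(x, weights, bias))
    return dense(x, w_out, b_out)


rng = random.Random(0)
features = [5.1, 3.5, 1.4, 0.2]  # an iris-style input: four measurements
linear = random_layer(4, 3, rng)  # 4 features -> 3 class scores
deep = [random_layer(4, 10, rng), random_layer(10, 10, rng), random_layer(10, 3, rng)]
print(linear_logits(features, linear))  # three class scores
print(dnn_logits(features, deep))       # also three class scores
```

Note that dnn_logits given only an output layer (no hidden layers) computes exactly what linear_logits does, which is one way to see a linear model as the shallowest case of a neural network.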

Other changes

Virtually everything else stays the same! There is one additional parameter required for a deep neural network that we did not include earlier.

Since deep neural networks have multiple layers, and each layer can have a different number of nodes, we add a hidden_units argument.

The hidden_units argument lets you supply an array with the number of nodes in each layer; for example, hidden_units=[10, 10] describes two hidden layers of 10 nodes each. This lets you create a neural network by specifying its size and shape, rather than wiring the whole thing up from scratch by hand. Adding or removing layers is as simple as adding or removing elements of the array!
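Because each entry of hidden_units is one layer's width, the list fully determines the network's layer shapes. The sketch below, plain Python with hypothetical helper names, derives the weight-matrix shape of every fully connected layer from a hidden_units list, assuming four input features and three classes as in iris, and counts weights plus biases. The counts describe a standard dense network and are an illustration, not a figure reported by TensorFlow.

```python
def layer_shapes(n_features, hidden_units, n_classes):
    """Return (inputs, outputs) for each fully connected layer, input to output."""
    sizes = [n_features, *hidden_units, n_classes]
    return list(zip(sizes[:-1], sizes[1:]))


def count_parameters(n_features, hidden_units, n_classes):
    """Count weights (inputs * outputs) plus one bias per output, summed over layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in layer_shapes(n_features, hidden_units, n_classes))


# Four iris features in, three classes out; only the hidden layers change.
for hidden_units in ([10, 10], [10, 20, 10], [64]):
    shapes = layer_shapes(4, hidden_units, 3)
    print(hidden_units, shapes, count_parameters(4, hidden_units, 3))
# parameter counts: 193, 513, 515
```

Editing the list is all it takes to change the architecture: [10, 10] yields three weight matrices, while [10, 20, 10] yields four.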

More options

Of course, with any pre-built system, what you gain in convenience you often lose in customization. DNNClassifier tries to get around this by offering a number of additional parameters you can optionally set. When you leave them out, reasonable defaults are used. For example, the optimizer, the activation function, and the dropout ratio can all be customized, along with many others.
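As an illustration of what one of these knobs controls, here is a plain-Python sketch of inverted dropout, a common formulation of the technique: during training each hidden activation is zeroed with probability rate, and the survivors are scaled by 1 / (1 - rate) so their expected value is unchanged; at inference time nothing is dropped. The function name and signature are hypothetical and this is not TensorFlow's implementation; with DNNClassifier you would simply pass a dropout value and let the library apply it.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # Inference (or no dropout): pass activations through unchanged.
        return list(activations)
    keep = 1.0 - rate
    # Keep each unit with probability `keep`; scale kept units by 1 / keep.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)  # seeded so the example is repeatable
hidden = [0.5, 1.2, 0.3, 2.0, 0.7, 1.5]
print(dropout(hidden, rate=0.5, rng=rng))                  # each unit is 0.0 or doubled
print(dropout(hidden, rate=0.5, rng=rng, training=False))  # unchanged at inference
```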

And that's all

What else do you need to do to convert our model from linear to deep? Nothing! That's the beauty of the Estimators framework: one common way to organize your data, training, evaluation, and model export, while still giving you the flexibility to experiment with different models and parameters.

An easy way to go deeper

Sometimes deep neural networks can outperform linear models. In those cases, TensorFlow makes it easy to switch from a linear model to a deep one with very few code changes, by using the Estimators framework to replace just one function call. This means more time working on your data, model, and parameters rather than wiring up the training loop. For easy deep neural networks, use TensorFlow Estimators!
